Introduction

In the world of networking, latency plays a crucial role in determining the efficiency and responsiveness of communication between devices. For businesses and organizations relying on high-speed networks, minimizing latency is paramount. One of the key factors that affect latency is the switching method used in network switches, so understanding the different switching methods and how they impact latency can be vital in optimizing network performance. This article explores the switching methods used in modern network devices, focusing on which one offers the lowest latency. We will discuss each method’s characteristics, latency trade-offs, and real-world applications, with a particular emphasis on how these methods are used in different network environments. Whether you are a network professional, an IT student, or a candidate preparing for a certification exam, this article will help you gain a deeper understanding of the relationship between switching methods and network latency.

What is Latency in Networking?

Before delving into the different switching methods, it’s important to understand what latency means in networking. Latency is the time it takes for data to travel from the source to the destination. End-to-end latency is typically measured in milliseconds (ms) and is affected by multiple factors, including the network medium, routing paths, and the types of network devices along the path. The delay added by a single switch is much smaller, usually measured in microseconds or even nanoseconds, and depends on how quickly the switch can forward a frame from one port to another. That per-hop delay matters most in networks where speed and real-time data transmission are critical, such as video streaming, online gaming, and VoIP communications.

Different Switching Methods and Their Impact on Latency

Network switches forward data frames between devices on a local area network (LAN). How they handle those frames has a significant impact on latency. Modern switches use several switching methods, and each one affects overall latency differently. The most common switching methods are:

- Store-and-Forward Switching
- Cut-Through Switching
- Fragment-Free Switching
- Adaptive Switching

Let’s take a closer look at each of these methods.

1. Store-and-Forward Switching

Store-and-forward is one of the oldest and most traditional switching methods. The switch receives the entire frame before forwarding it to the destination port. It then checks the frame’s integrity by recomputing its Cyclic Redundancy Check (CRC) and comparing the result with the 4-byte Frame Check Sequence (FCS) at the end of the frame. If the two do not match, the frame is corrupted and the switch discards it instead of forwarding it.
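To make the error check concrete, here is a minimal Python sketch of the store-and-forward decision. It assumes a frame is given as raw bytes from the destination MAC address through the 4-byte FCS (preamble already stripped), and that the FCS is the CRC-32 of the preceding bytes packed least-significant byte first, which is how it usually appears in a packet capture. `zlib.crc32` uses the same CRC-32 polynomial as Ethernet; the function names `append_fcs`, `fcs_is_valid`, and `store_and_forward` are hypothetical.

```python
import struct
import zlib

FCS_LEN = 4  # the Ethernet Frame Check Sequence is a 4-byte CRC-32


def append_fcs(body: bytes) -> bytes:
    """Build a test frame: body followed by its CRC-32, packed little-endian (assumed layout)."""
    return body + struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)


def fcs_is_valid(frame: bytes) -> bool:
    """Recompute the CRC-32 over everything except the trailing FCS and compare."""
    if len(frame) <= FCS_LEN:
        return False
    body, received_fcs = frame[:-FCS_LEN], frame[-FCS_LEN:]
    return received_fcs == struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)


def store_and_forward(frame: bytes) -> str:
    """The whole frame is already buffered, so a bad FCS can be caught and the frame dropped."""
    return "forward" if fcs_is_valid(frame) else "drop"


good = append_fcs(bytes(60))               # 60-byte body + 4-byte FCS = 64-byte minimum frame
bad = bytes([good[0] ^ 0x01]) + good[1:]   # flip a single bit "in transit"
print(store_and_forward(good))  # forward
print(store_and_forward(bad))   # drop
```

A CRC-32 detects every single-bit error, so the flipped frame is always dropped. The key point is that the check needs the last 4 bytes, which is why the switch must buffer the whole frame first.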

Latency in Store-and-Forward Switching:

Store-and-forward switching has higher latency than the other methods because the switch must wait for the entire frame to arrive before it begins forwarding it. That wait grows with frame size: a large frame takes longer to receive than a small one, and every store-and-forward switch along the path adds the delay again. In exchange, corrupted frames are dropped rather than passed on. In high-traffic networks or environments where low-latency communication is critical, this added delay can become a bottleneck. This method is typically used in situations where data integrity is prioritized over speed.
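The size of that wait is simple arithmetic: before forwarding can start, every bit of the frame must arrive, which takes (frame length in bytes × 8) ÷ link speed. The sketch below assumes a 1 Gbps port and ignores the preamble, the MAC table lookup, and queuing; `receive_time_us` is a hypothetical helper name.

```python
def receive_time_us(frame_bytes: int, link_bps: float) -> float:
    """Microseconds needed to receive frame_bytes at link_bps bits per second."""
    return frame_bytes * 8 / link_bps * 1e6


GIGABIT = 1e9  # assumed port speed: 1 Gbps

# 64 and 1518 bytes are the minimum and maximum untagged Ethernet frame sizes
for size in (64, 512, 1518):
    print(f"{size:>5} bytes: {receive_time_us(size, GIGABIT):.3f} us")
```

This prints 0.512, 4.096, and 12.144 microseconds respectively, so on a 1 Gbps link a full-size frame waits about 12 µs in each store-and-forward switch before it can leave.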

2. Cut-Through Switching

Cut-through switching significantly reduces latency compared with store-and-forward switching. Instead of waiting for the entire frame, the switch begins forwarding it to the destination port as soon as it has read the destination MAC address, which occupies the first 6 bytes of the frame. Once that address has been received and looked up, the rest of the frame is relayed as it arrives.
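A minimal Python sketch of the cut-through forwarding decision follows. It assumes the preamble has already been stripped so the destination MAC is the first 6 bytes received, and it uses a small hypothetical `MAC_TABLE` (MAC address to port) with simplified flooding for unknown destinations. `cut_through_egress` is an illustrative name, not a real switch API.

```python
from typing import Dict, List, Optional

DEST_MAC_LEN = 6  # the destination MAC address is the first 6 bytes of the frame

# Hypothetical MAC address table: destination MAC -> egress port
MAC_TABLE: Dict[bytes, int] = {
    bytes.fromhex("aabbccddeeff"): 3,
    bytes.fromhex("001122334455"): 7,
}


def cut_through_egress(received: bytes, ingress_port: int,
                       all_ports: List[int]) -> Optional[List[int]]:
    """Pick egress port(s) as soon as the destination MAC has arrived.

    Returns None while fewer than 6 bytes have been received (keep waiting).
    """
    if len(received) < DEST_MAC_LEN:
        return None
    dest_mac = received[:DEST_MAC_LEN]
    if dest_mac in MAC_TABLE:
        return [MAC_TABLE[dest_mac]]
    # Unknown or broadcast destination: flood to every port except the one it came in on
    return [p for p in all_ports if p != ingress_port]


ports = list(range(1, 9))
print(cut_through_egress(bytes.fromhex("aabbcc"), 1, ports))            # None
print(cut_through_egress(bytes.fromhex("aabbccddeeff0800"), 1, ports))  # [3]
```

The decision depends only on the first 6 bytes, which is what lets the switch start transmitting long before the rest of the frame has arrived.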

Latency in Cut-Through Switching:

The primary advantage of cut-through switching is its low latency: forwarding starts almost immediately after the destination address arrives, without waiting for the rest of the frame. This makes cut-through switching attractive for latency-sensitive applications such as video conferencing, VoIP, and online gaming. The cost is data integrity. The FCS sits at the end of the frame, so by the time the switch could check it, the frame has already been forwarded; the switch cannot drop a corrupted frame and may pass it on to the next device. This trade-off between speed and data integrity must be weighed against the network’s requirements.
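The timing problem can be sketched by simulating a frame arriving one byte at a time. This Python example assumes the same FCS layout as a little-endian CRC-32 of the preceding bytes, and `cut_through_stream` is a hypothetical name; the "error count" stands in for the kind of counter a switch might keep, since it can no longer stop the frame.

```python
import struct
import zlib

DEST_MAC_LEN = 6  # bytes needed before a cut-through switch can choose an egress port
FCS_LEN = 4       # CRC-32 Frame Check Sequence at the very end of the frame


def fcs_ok(frame: bytes) -> bool:
    """Assumed FCS layout: little-endian CRC-32 of all preceding bytes."""
    body, fcs = frame[:-FCS_LEN], frame[-FCS_LEN:]
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF) == fcs


def cut_through_stream(frame: bytes):
    """Simulate byte-by-byte arrival; return (bytes sent on the egress port, error count)."""
    egress = bytearray()
    forwarding = False
    for received, byte in enumerate(frame, start=1):
        if received == DEST_MAC_LEN:
            forwarding = True
            egress += frame[:DEST_MAC_LEN]  # destination known: send what is buffered
        elif forwarding:
            egress.append(byte)             # relay each later byte as it arrives
    # Only now is the FCS available, and the frame has already gone out.
    errors = 0 if fcs_ok(frame) else 1
    return bytes(egress), errors


body = bytes.fromhex("aabbccddeeff") + bytes(54)
frame = body + struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
corrupted = frame[:20] + bytes([frame[20] ^ 0xFF]) + frame[21:]
sent, errors = cut_through_stream(corrupted)
print(sent == corrupted, errors)  # True 1
```

The corrupted frame is transmitted in full, and the error is only noticed afterwards.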

3. Fragment-Free Switching

Fragment-free switching is a hybrid approach that sits between store-and-forward and cut-through switching. The switch begins forwarding the frame as soon as it has received the first 64 bytes. Because 64 bytes is the minimum legal Ethernet frame size, and collisions on a correctly designed Ethernet segment are detected within the first 64 bytes, a frame that reaches this length is unlikely to be a collision fragment (a "runt"). Frames that end before 64 bytes are discarded. The switch does not verify the FCS, so errors later in the frame still go undetected.
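The fragment-free rule can be written as a small decision function. This is a sketch, assuming the switch knows how many bytes of the current frame have arrived and whether the frame has ended; `fragment_free_decision` is a hypothetical name.

```python
MIN_FRAME_LEN = 64  # minimum Ethernet frame size in bytes (destination MAC through FCS)


def fragment_free_decision(bytes_received: int, frame_ended: bool) -> str:
    """What a fragment-free port does with the frame currently arriving.

    - 64 bytes received: start forwarding (the FCS is still not checked).
    - Frame ended before 64 bytes: it is a runt, likely a collision fragment, so drop it.
    - Otherwise: keep buffering.
    """
    if bytes_received >= MIN_FRAME_LEN:
        return "forward"
    if frame_ended:
        return "drop"
    return "wait"


print(fragment_free_decision(40, frame_ended=False))  # wait
print(fragment_free_decision(40, frame_ended=True))   # drop
print(fragment_free_decision(64, frame_ended=False))  # forward
```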

Latency in Fragment-Free Switching:

Fragment-free switching provides a middle ground between the high latency of store-and-forward switching and the low latency of cut-through switching. By waiting for the first 64 bytes, it filters out collision fragments, reducing the chance of forwarding damaged frames while still forwarding sooner than store-and-forward. Its latency is therefore lower than store-and-forward but higher than cut-through. This method is often used in networks that need a balance between low latency and data integrity.

4. Adaptive Switching

Adaptive switching is a dynamic method that moves between store-and-forward, cut-through, and fragment-free switching. The switch automatically chooses the most appropriate method based on network conditions, such as traffic load and the presence of errors.

Latency in Adaptive Switching:

The latency in adaptive switching varies with the method the switch is using at any given time. During low-traffic periods, or when data integrity is less of a concern, the switch may use cut-through switching to minimize latency. When the network experiences high traffic or high error rates, the switch may fall back to store-and-forward or fragment-free switching to protect data integrity. Adaptive switching is particularly useful where traffic conditions change constantly and the network needs to adjust to maintain optimal performance.
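One common way to implement this is an error-rate trigger. The Python sketch below models only that trigger (not traffic load): the port counts frames with a bad FCS, and at the end of each measurement interval it falls back to store-and-forward if the error rate is too high, returning to cut-through once it drops. The thresholds and the `AdaptivePort` class are hypothetical; real switches expose vendor-specific settings.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration
HIGH_ERROR_RATE = 0.01   # fall back to store-and-forward above 1% errored frames
LOW_ERROR_RATE = 0.001   # return to cut-through below 0.1% errored frames


@dataclass
class AdaptivePort:
    mode: str = "cut-through"
    frames: int = 0
    errored: int = 0

    def record(self, fcs_ok: bool) -> None:
        """Count each frame's FCS result (known only after forwarding in cut-through mode)."""
        self.frames += 1
        if not fcs_ok:
            self.errored += 1

    def reevaluate(self) -> str:
        """At the end of an interval, choose the mode and reset the counters."""
        if self.frames:
            rate = self.errored / self.frames
            if self.mode == "cut-through" and rate > HIGH_ERROR_RATE:
                self.mode = "store-and-forward"
            elif self.mode == "store-and-forward" and rate < LOW_ERROR_RATE:
                self.mode = "cut-through"
        self.frames = self.errored = 0
        return self.mode


port = AdaptivePort()
for i in range(1000):
    port.record(fcs_ok=(i % 50 != 0))  # 20 errored frames out of 1000 = 2%
print(port.reevaluate())  # store-and-forward
for i in range(1000):
    port.record(fcs_ok=True)
print(port.reevaluate())  # cut-through
```

Using two separate thresholds adds hysteresis, so a port whose error rate hovers near a single cutoff does not flip between modes every interval.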

Which Switching Method Offers the Lowest Latency?

Among the different switching methods, cut-through switching is generally regarded as having the lowest latency. It starts forwarding data as soon as the destination address is received, which eliminates the wait for the entire frame that store-and-forward switching imposes. However, cut-through switching cannot check the FCS before forwarding, so corrupted frames may be passed along, consuming bandwidth until a downstream device detects and discards them. In environments where data integrity is crucial, store-and-forward or fragment-free switching may be more appropriate, even though they introduce higher latency.
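The ranking follows from how many bytes each method must receive before it can start forwarding. This Python sketch compares the resulting receive delay for a full-size 1518-byte frame, assuming a 1 Gbps port and ignoring the preamble, lookup time, and queuing; adaptive switching is left out because its delay equals whichever method is active. The helper names are hypothetical.

```python
LINK_BPS = 1e9  # assumed 1 Gbps ingress port


def bytes_before_forwarding(method: str, frame_len: int) -> int:
    """Bytes that must arrive (after preamble/SFD) before forwarding can begin."""
    if method == "cut-through":
        return 6                      # destination MAC address only
    if method == "fragment-free":
        return min(64, frame_len)     # first 64 bytes
    if method == "store-and-forward":
        return frame_len              # entire frame, including the FCS
    raise ValueError(f"unknown method: {method}")


def wait_time_us(method: str, frame_len: int, link_bps: float = LINK_BPS) -> float:
    """Receive delay in microseconds before forwarding starts (ignores lookup and queuing)."""
    return bytes_before_forwarding(method, frame_len) * 8 / link_bps * 1e6


for method in ("cut-through", "fragment-free", "store-and-forward"):
    print(f"{method:>17}: {wait_time_us(method, 1518):7.3f} us")
```

Under these assumptions the waits are about 0.048, 0.512, and 12.144 microseconds. The cut-through and fragment-free figures stay fixed regardless of frame size, while the store-and-forward figure grows with every extra byte.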

Real-World Applications of Low-Latency Switching

Low-latency communication is essential for many applications. Here are some real-world scenarios where low-latency switching matters:

- VoIP (Voice over IP): VoIP applications require real-time communication, and high latency can lead to poor voice quality, delays, and dropped calls. Cut-through switching can help minimize the delay added within the local network and keep communication clear.

- Online Gaming: Fast-paced, real-time multiplayer games require minimal latency to ensure smooth gameplay. Low-latency switching methods like cut-through can reduce the delay contributed by local switches, helping to reduce lag and improve the gaming experience.

- Video Conferencing: Video conferencing tools like Zoom, Microsoft Teams, and Google Meet rely on low-latency communication to provide a seamless experience. Cut-through switching can help keep the delay in video and audio transmission across the local network to a minimum.

- Financial Trading: In high-frequency trading environments, even microseconds of delay can result in significant financial losses. Low-latency switching methods are essential in these environments to ensure that trades are executed as quickly as possible.

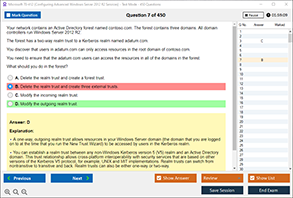

Free Sample Questions

Question 1: Which switching method has the lowest latency?

a) Store-and-Forward

b) Cut-Through

c) Fragment-Free

d) Adaptive

Answer: b) Cut-Through

Question 2: What is the primary disadvantage of using cut-through switching?

a) High latency

b) No error checking

c) High cost

d) High power consumption

Answer: b) No error checking

Question 3: In which scenario would adaptive switching be most useful?

a) Low-traffic environments

b) High-frequency trading

c) Real-time applications with fluctuating network conditions

d) Network with minimal data integrity concerns

Answer: c) Real-time applications with fluctuating network conditions

Conclusion

When it comes to reducing latency in network communication, cut-through switching is the method that offers the lowest latency. By forwarding data as soon as the destination address is received, it minimizes the delay each switch adds and supports real-time performance. However, this speed comes at the cost of data integrity, because the switch forwards frames before it can check their FCS and may pass corrupted frames along. For applications that demand high-speed communication, such as VoIP, online gaming, and financial trading, cut-through switching is often the best choice. On the other hand, for environments where data integrity is more critical, methods like store-and-forward or fragment-free may be more suitable.

At DumpsQueen, we understand the importance of efficient networking for certification and real-world applications. Whether you are preparing for your CCNA exam or working in a network environment that requires low-latency communication, it is crucial to understand how switching methods impact performance. Make sure to review and practice with relevant study materials so that you are equipped to handle different network scenarios effectively.